December 13, 2025 · Anthony.Kim

Caret Router: Why We Moved from LiteLLM to any-llm

"LiteLLM was powerful, but for Caret it was an overly heavy and complex router. any-llm, with its lean, API-first design, let us extend only

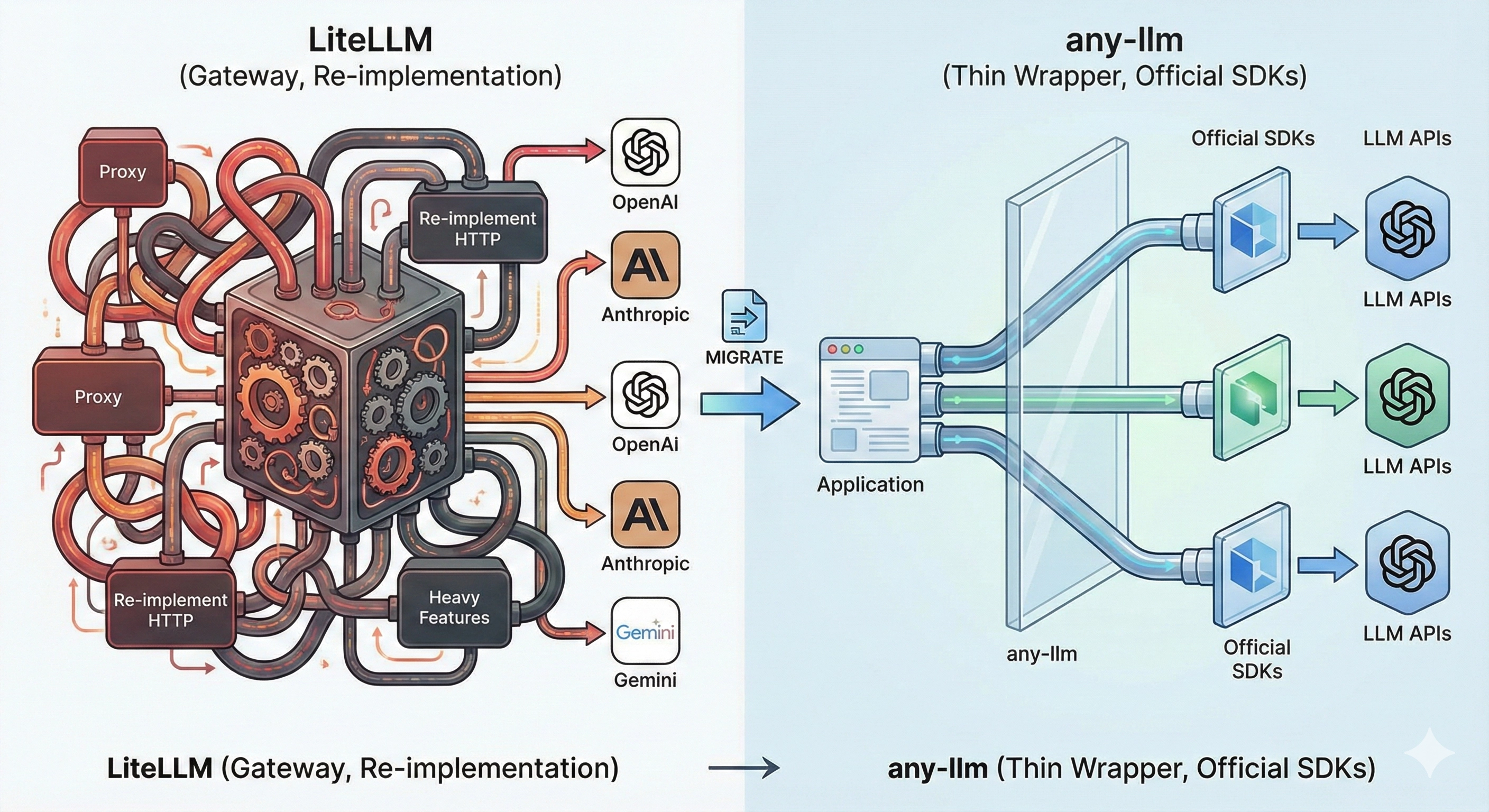

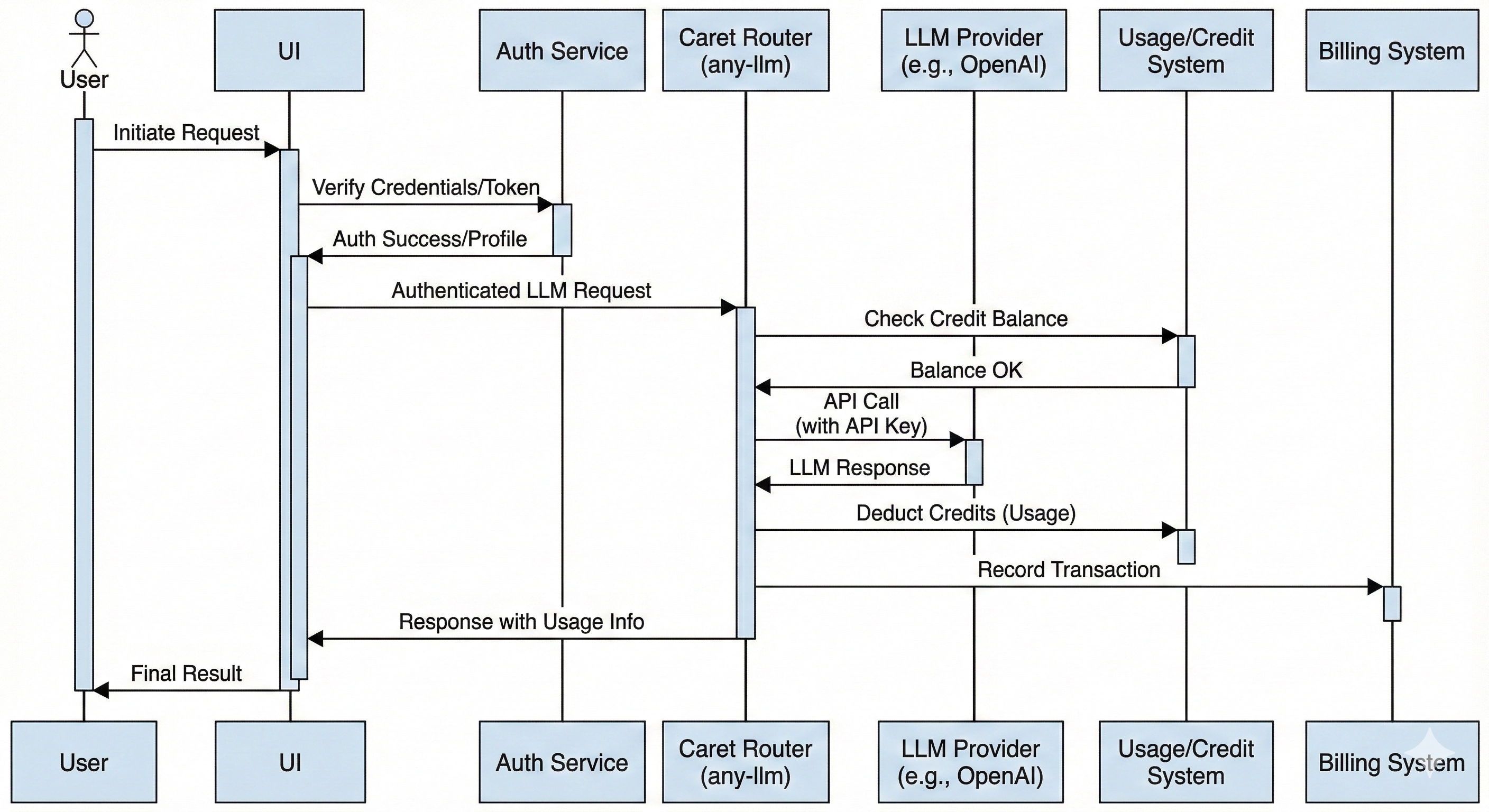

When designing the LLM router at Caret, the most important thing for us was “simplicity we can control.” Models keep multiplying, vendors change, and requirements grow — and we’ve learned more than once that if the router becomes heavy too, operational complexity ends up eating product velocity.

We used LiteLLM for a while. Functionally, it’s excellent and very well-built. But over time it became clear that parts of it didn’t fit where Caret was headed or how we operate in production. So we switched our router from LiteLLM → any-llm.

This isn’t a “comparison review,” but a record of why we switched — and what became possible after the switch.

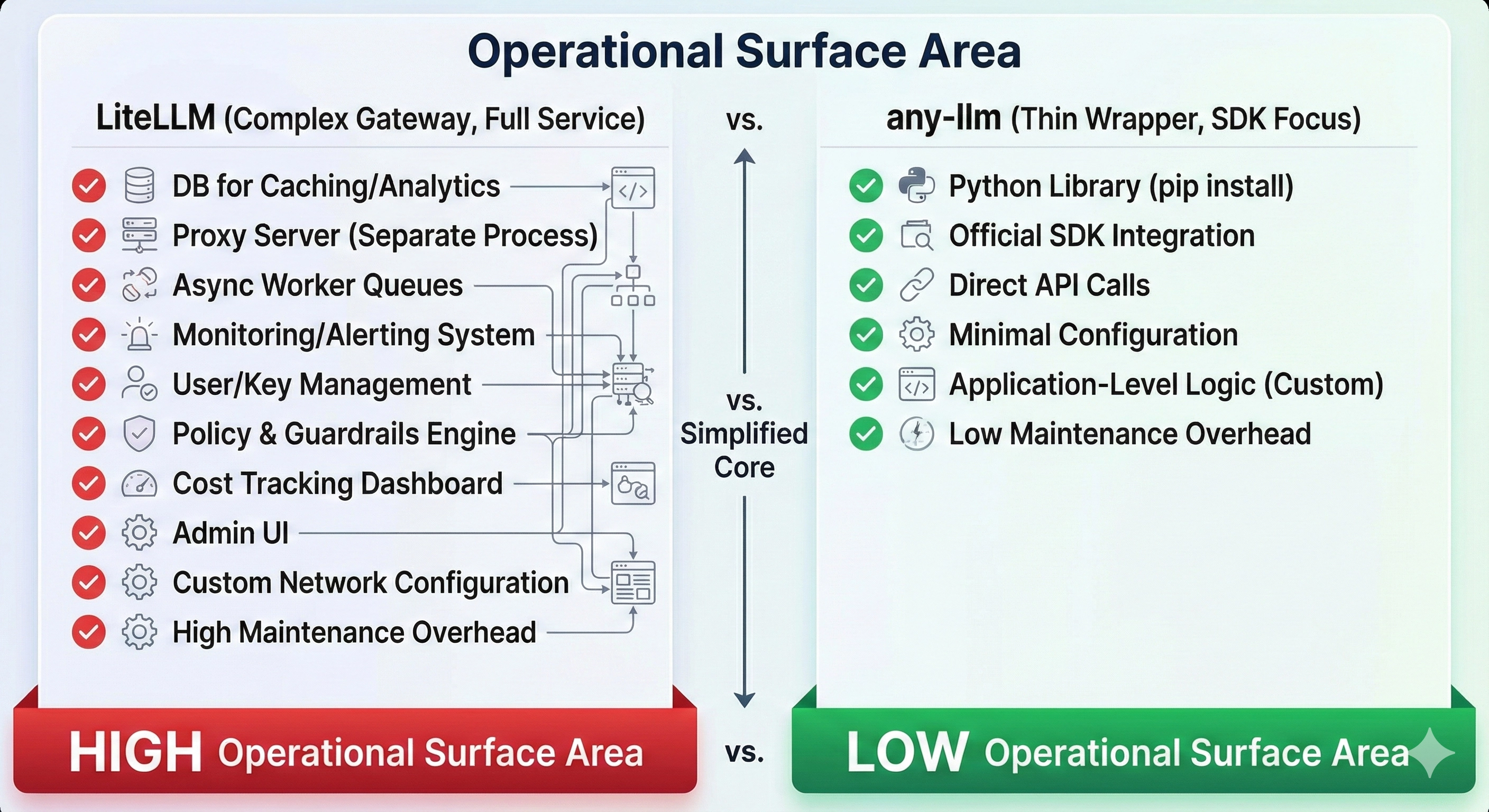

1. LiteLLM Was Too Complex, and Caret Didn’t Need Many of Its Features

LiteLLM is closer to an AI gateway / proxy server than a simple SDK: multi-tenancy, virtual keys, org management, budget controls, logging, alerts, and database integrations. In enterprise environments, these are undeniably powerful advantages.

The problem is that Caret’s router didn’t need that kind of feature set.

- Changing the router dragged in a DB, worker setup, and operational parameters

- We had to maintain a structure that included features we didn’t use

- In the end, “operating the router” became more important than “routing LLMs”

As a result, LiteLLM became a heavy choice for Caret not because it lacked features, but because it had too many.

2. any-llm Was Lean, and Easy to Extend

The biggest reason we chose any-llm is simple.

“A router should be thin.”

any-llm is fundamentally a library focused on a clean API interface.

- It doesn’t force you into a proxy server

- It focuses on abstracting model calls

- The structure is simple, so it’s easy to understand at the code level

This isn’t just about being “lightweight” — it means we can embed it naturally into our service architecture.

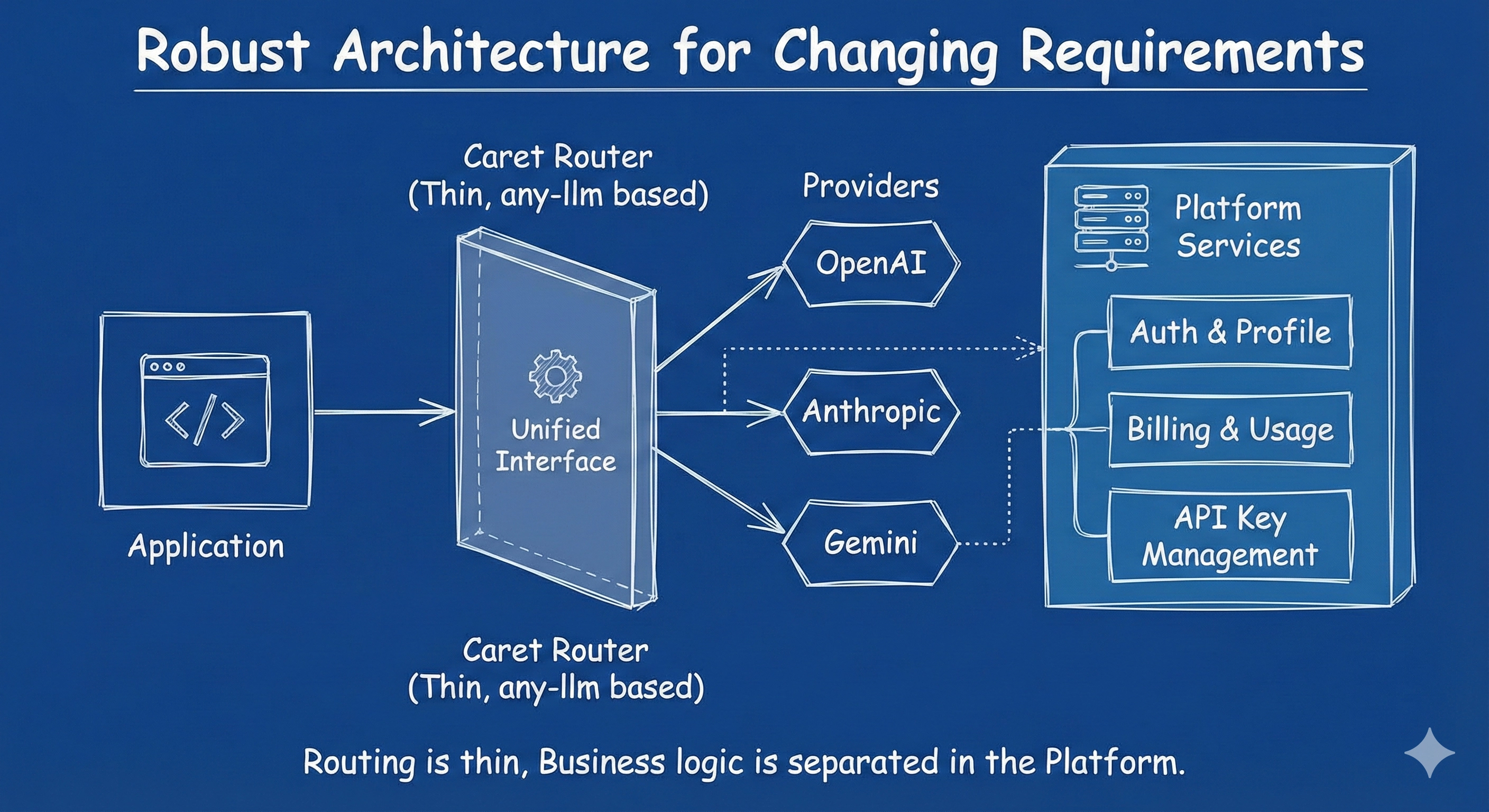

We wanted the router to be an internal component of the Caret platform rather than a standalone service, so this was decisive.
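To make "thin" concrete, here is a minimal sketch of a router that only resolves a model alias and delegates the call. It is not Caret's actual code: `ThinRouter`, the alias table, the model name, and the `complete` callable are all hypothetical. In a real service `complete` would be the completion function of whatever LLM library is in use; here it is injected so the example runs offline without API keys.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical signature for the call we delegate to: (model, messages) -> reply.
# In a real service this would be the LLM library's completion function.
CompleteFn = Callable[[str, list[dict[str, str]]], Any]


@dataclass
class ThinRouter:
    """Resolves an alias to a concrete model and delegates; nothing else."""

    complete: CompleteFn
    aliases: dict[str, str]  # alias -> model identifier (made-up names below)

    def route(self, alias: str, messages: list[dict[str, str]]) -> Any:
        try:
            model = self.aliases[alias]
        except KeyError:
            raise ValueError(f"unknown model alias: {alias!r}") from None
        return self.complete(model, messages)


def echo_backend(model: str, messages: list[dict[str, str]]) -> str:
    """Stand-in backend so the sketch runs without network access."""
    return f"[{model}] {messages[-1]['content']}"


router = ThinRouter(complete=echo_backend, aliases={"fast": "provider-a/small-model"})
reply = router.route("fast", [{"role": "user", "content": "hello"}])
```

Because the router is an ordinary object inside the service process, there is no separate proxy, database, or worker to deploy alongside it.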

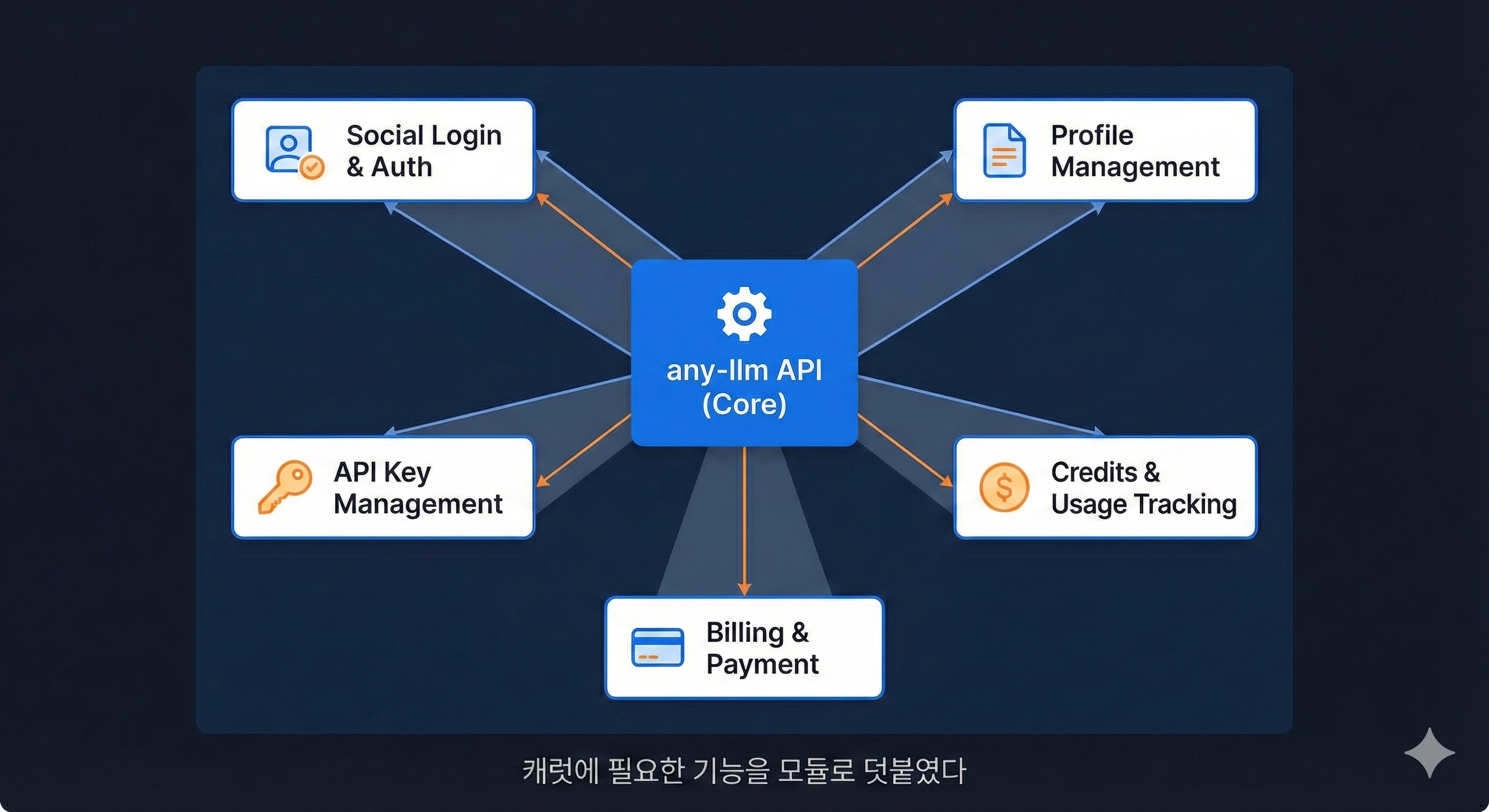

3. We Could Extend What any-llm Doesn’t Provide, in a Way That Fits Caret

One of the hardest parts of using LiteLLM was “fitting Caret into a structure that was already decided.”

After moving to any-llm, our approach changed completely.

The router routes — we build everything else.

As a result, we could add features that aren’t in any-llm but are essential for Caret — in our own way.

- Profile management

- Billing / payments

- API key management

- Credit management

- Social login & authentication

These features shouldn’t be enforced at the router level; they needed to integrate naturally with Caret’s business logic.

Thanks to any-llm’s simple API structure, this kind of extension became much easier.
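As an illustration of "the router routes, we build everything else," the sketch below wraps a routing function with a credit check that lives entirely outside the router. Every name here (`CreditLedger`, `with_credits`, `fake_route`, the flat per-request cost) is an assumption made for the example, not Caret's implementation, and the in-memory ledger stands in for a real credit store.

```python
from dataclasses import dataclass, field
from typing import Callable


class InsufficientCredits(Exception):
    """Raised when a user cannot afford a request."""


@dataclass
class CreditLedger:
    """In-memory stand-in for a real credit store (hypothetical)."""

    balances: dict[str, int] = field(default_factory=dict)

    def charge(self, user_id: str, cost: int) -> None:
        balance = self.balances.get(user_id, 0)
        if balance < cost:
            raise InsufficientCredits(f"{user_id} has {balance}, needs {cost}")
        self.balances[user_id] = balance - cost


def with_credits(
    route: Callable[[str, str], str], ledger: CreditLedger, cost: int
) -> Callable[[str, str, str], str]:
    """Wrap a routing function so credits are charged before each call."""

    def guarded(user_id: str, model: str, prompt: str) -> str:
        ledger.charge(user_id, cost)  # business rule lives outside the router
        return route(model, prompt)   # the router stays unaware of credits

    return guarded


def fake_route(model: str, prompt: str) -> str:
    """Stand-in for the thin router so the sketch runs offline."""
    return f"{model}: {prompt}"


ledger = CreditLedger({"alice": 3})
ask = with_credits(fake_route, ledger, cost=2)
first = ask("alice", "some-model", "hi")  # succeeds and leaves alice with 1 credit
```

This version charges before the call, so a failed request still costs credits. A production system would more likely reserve credits up front and settle or refund after the response.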

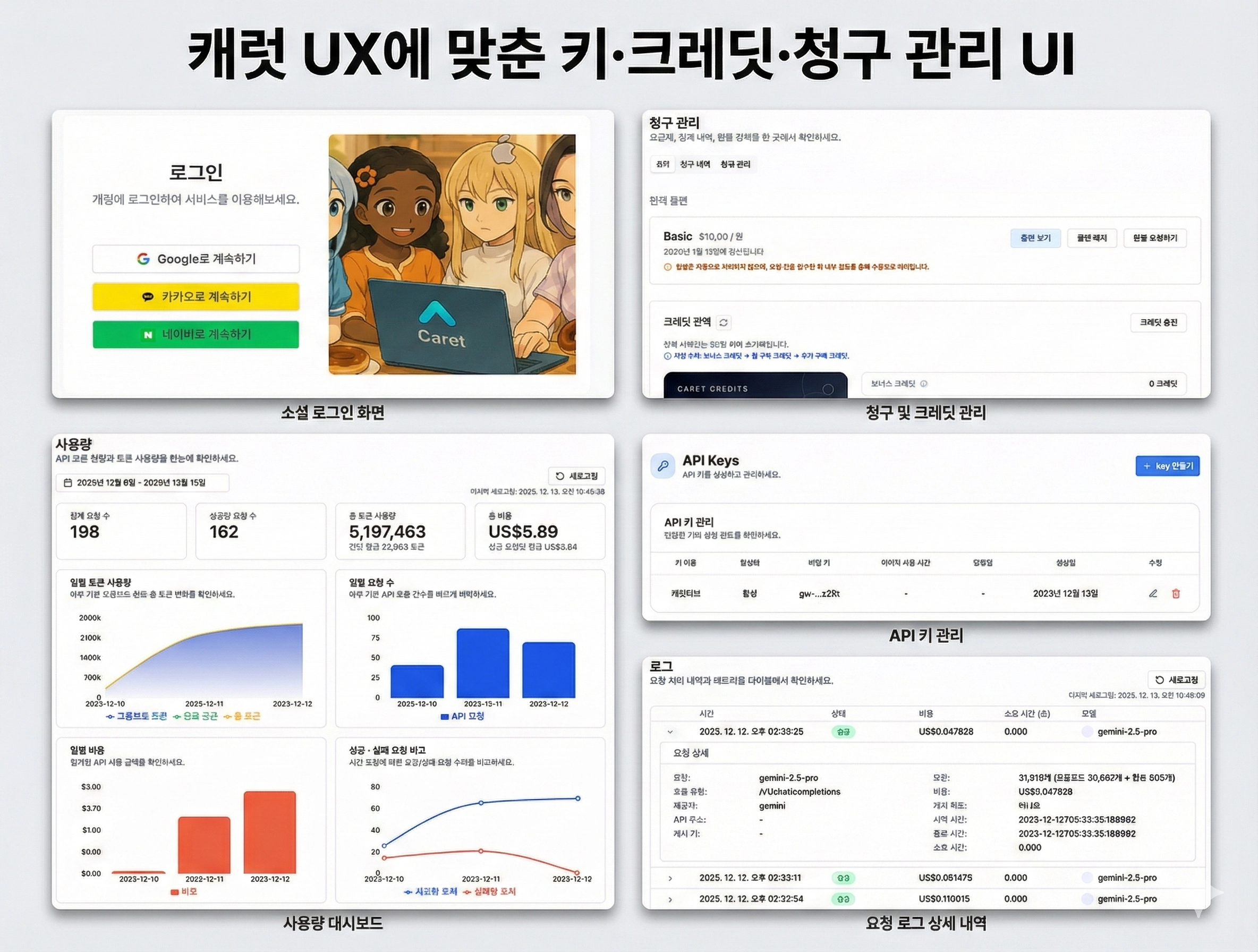

4. Because any-llm Only Provides an API, We Could Build the UI Completely Freely

LiteLLM is closer to a “platform that includes an admin UI.” But Caret already has its own UX and design philosophy.

any-llm doesn’t impose any UI at all.

- Provides only an API

- The service decides the UI/UX entirely

That let us design:

- A UI aligned with Caret’s user flows

- Screens where credits, payments, and key management connect naturally

- An experience where LLM features feel like “tools”

— without constraints.

Instead of the router defining the UX, the product wraps the router.

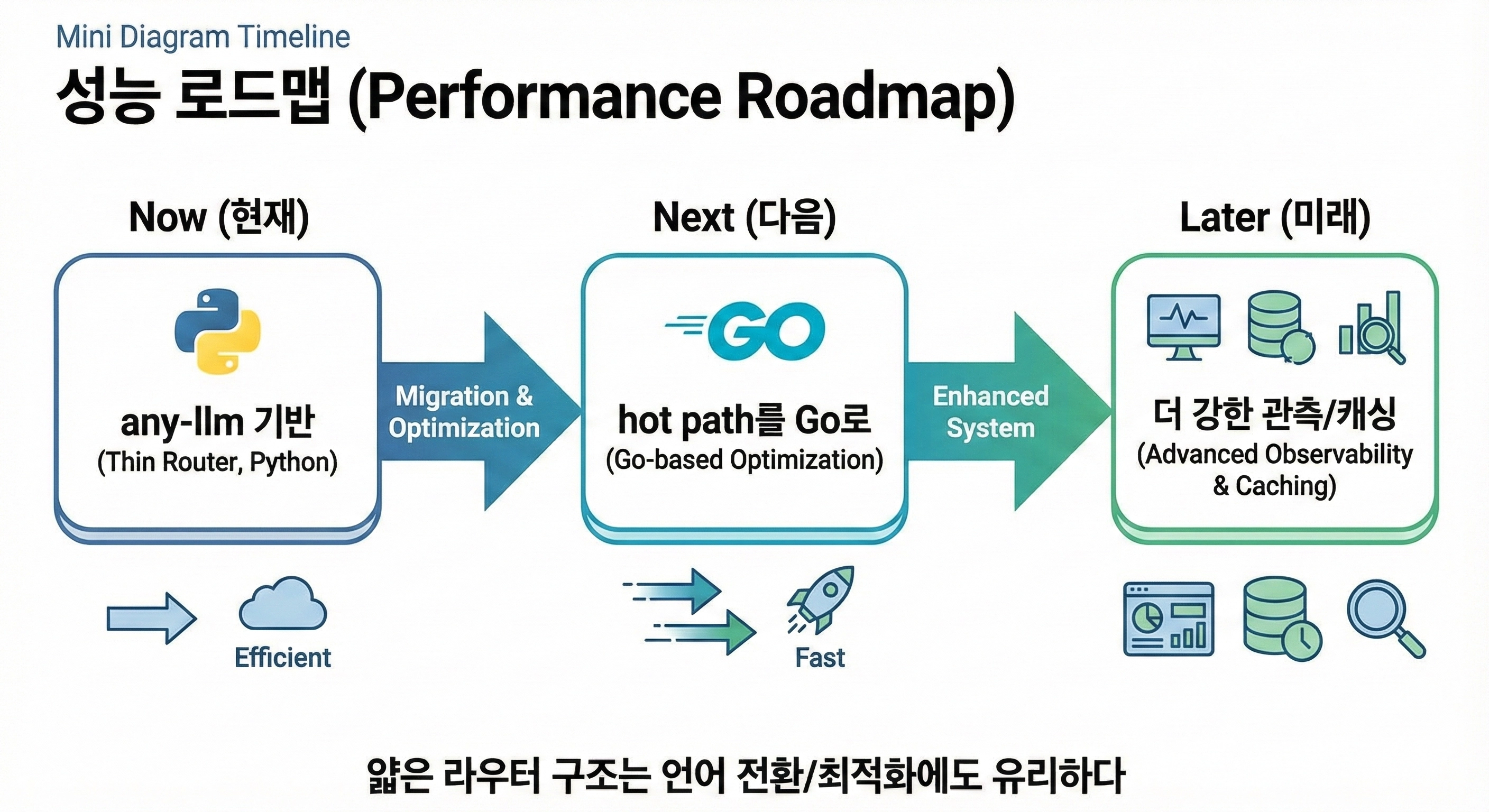

5. any-llm Also Makes It Easier to Improve Performance with Go Later

In the long run, Caret cares about performance and cost efficiency. The router layer in particular is where traffic tends to concentrate.

Because any-llm’s structure is simple:

- It isn’t locked into a specific language/runtime

- Rebuilding it in Go — or swapping out parts with Go components later — is a realistic option

That would have been a harder decision if we were tied to a design that assumes a heavy proxy server. With an any-llm-based structure, we can keep our performance strategy flexible.

6. We’ll Open-Source It Once It’s Cleaned Up

As we moved from LiteLLM to any-llm and added Caret-specific functionality ourselves, one thing became clear.

This structure is too good to keep to ourselves.

So we’re currently cleaning up the code, and once it’s ready we’ll release it as open source.

- An any-llm-based routing architecture

- The extension patterns we actually use in Caret

- Operational learnings from running it

We’re looking for developers who want to think and build together. Rather than a “finished framework,” we hope it becomes a foundation we grow together.

In Closing

This switch isn’t saying “LiteLLM is bad.” LiteLLM is still a great tool, and a good fit for many teams.

But for Caret, we needed:

- A thinner router

- More freedom to extend

- Clearer separation of responsibilities

And any-llm was the option that matched those needs.

What this choice leads to will become clearer through ongoing operations and the open-source process.

We’ll let the code do the talking soon.
